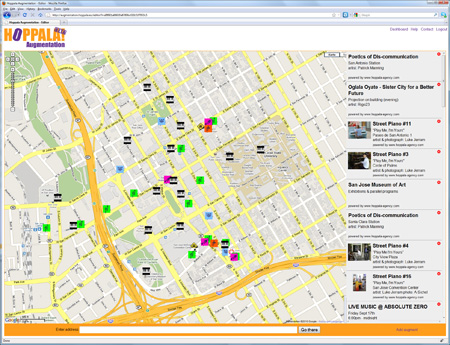

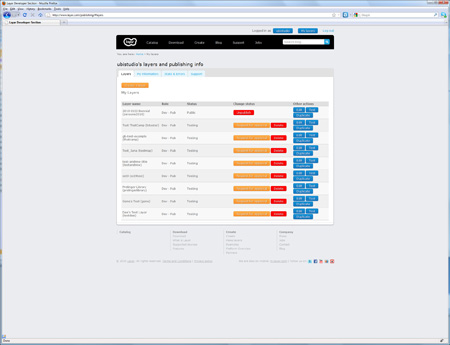

In collaboration with Adriano @Farano, I’ve been experimenting with creating historical experiences in augmented reality. Adriano’s on a Knight Fellowship at Stanford, and he’s seeking to push the boundaries of journalism using AR; my focus is developing new approaches to experience design for blended physical/digital storytelling, so our interests turn out to be nicely complementary. This is also perfectly aligned with the goals of @ubistudio, to explore ubiquitous media and the world-as-platform through hands-on learning and doing.

Adriano’s post about our first playtesting session, Rapid prototyping in Stanford’s Main Quad, included this image:

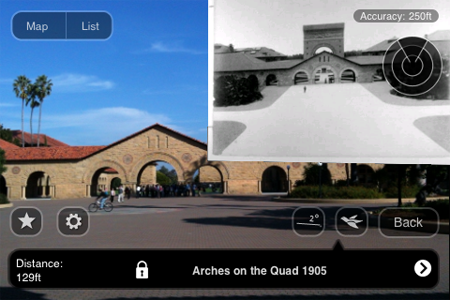

Taken from the interior of the Quad looking toward the Oval and Palm Drive, the photo aligns reasonably well with the real scene. Notably, the 1905 picture reveals a large arch in the background that no longer stands today. We later found out this was Memorial Arch, which was severely damaged in the great 1906 earthquake and subsequently demolished.

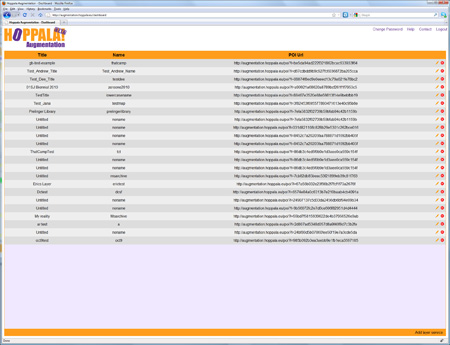

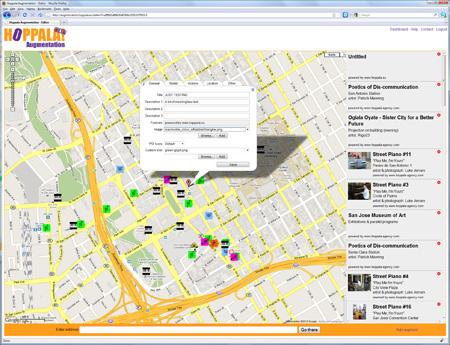

In our second playtesting session, we continued to experiment with historical images of the Quad, using Layar, Hoppala and my iPhone 3GS as our testbed. Photos were courtesy of the Stanford Archives. This view is from the front entrance to the Quad near the Oval, looking back toward the Quad. Here you can see the aforementioned Memorial Arch in 1906, now showing heavy damage from the earthquake. The short square structure on the right in the present-day view is actually the right base of the arch, now capped with Stanford’s signature red tile roof.

In this screencap, Arches on the Quad 1905 is showing as the currently selected POI, even though the photo is part of a different POI.

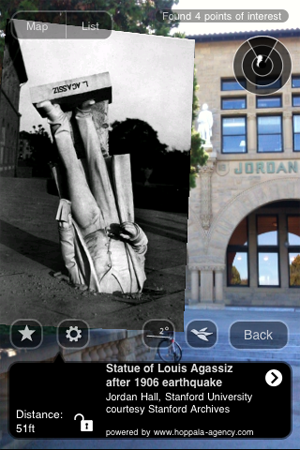

One of the more famous images from post-earthquake Stanford is this one, the statue of Louis Agassiz embedded in a walkway:

Although the image is scaled a bit too large to see the background well, you can make out that we are in front of Jordan Hall; the white statue mounted above the archway on the left is in fact the same one that is shown in the 1906 photo, nearly undamaged and restored to its original perch.

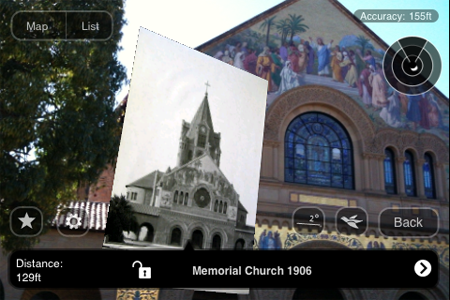

Finally, we have this pairing of Memorial Church in 2010 with its 1906 image. In the photo, you can see the huge bell tower that once crowned Mem Chu; it, too, was later destroyed in the earthquake.

Each of these images conveys some idea of the potential we see in using AR to tell engaging stories about the world. The similarities and differences seen over the distance of a century are striking, and begin to approach what Reid et al. defined as “magic moments” of connection between the virtual and the real [Magic moments in situated mediascapes, pdf]. However, many aspects of today’s mobile AR experience remain problematic and impose significant barriers to reaching those compelling moments. And so, the experiments continue…