At yesterday’s Facebook press event launching new mobile features, Mark Zuckerberg stirred up a minor tempest in the pundit-o-sphere. Asked when there would be a Facebook mobile app for the iPad, he responded glibly:

“iPad’s not mobile. Next question.” [laughter] “It’s not mobile, it’s a computer.” (watch the video)

This of course spawned dozens of blog posts and hundreds of tweets, basically saying either “he’s nuts!” or “validates what I’ve said all along.” And in this post-PC connected world, it’s interesting that Zuckerberg sees a distinction between mobiles and computers. But as you might imagine, I have a somewhat different take on this: We need to stop thinking that ‘mobile’ is defined by boxes.

Boxes vs. Humans

The entire mobile industry is built around the idea that boxes — handsets, tablets, netbooks, e-readers and so on — are the defining entity of mobility. Apps run on boxes. Content is formatted and licensed for boxes. Websites are (sometimes) box-aware. Network services are provisioned and paid for on a box-by-box basis. And of course, we happily buy the latest shiny boxes from the box makers, so that we can carry them with us everywhere we go.

And there’s the thing: boxes aren’t mobile. Until we pick them up and take them with us, they just sit there. Mobility is not a fundamental property of devices; mobility is a fundamental property of us. We humans are what’s mobile — we walk, run, drive and fly, moving through space and also through the contexts of our lives. We live in houses and apartments and favelas, we go to offices and shops and cities and the wilderness, and we pass through interstitial spaces like airports and highways and bus stations. Humans are mobile; you know this intuitively. We move fluidly through the physical and social contexts of our lives, but our boxes are little silos of identity, apps, services and data, and our apps are even smaller silos inside the boxes. Closed, box-centric systems are the dominant model of the mobile industry, and this is only getting worse in the exploding diversity of the embedded, embodied, connected world.

So why doesn’t our technology support human-centered mobility?

One big reason is, it’s a hard problem to solve. Or rather, it’s a collection of hard problems that interact. A platform for human-centered mobility might have the following dimensions:

* Personal identity, credentials, access rights, context store, data store, and services you provide, independent of devices. Something like a mash-up of Facebook profiles, Connect, Groups, and Apps with Skype, Evernote and Dropbox, for example.

* Device & object identities, credentials, rights, relationships, data & services; a Facebook of Things, if you will.

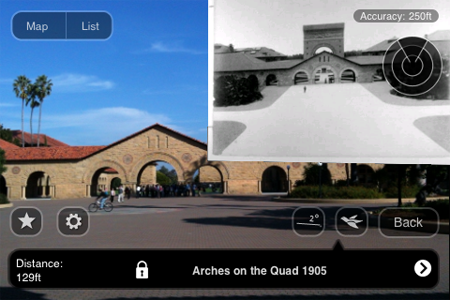

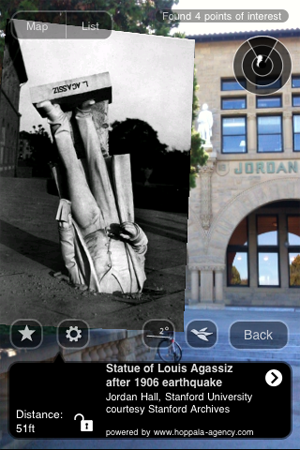

* Place identities, credentials, rights, relationships, data & services. Think of this as a Future Foursquare that provides place-based services to the people and objects within.

* Device & service interaction models, such that devices you are carrying could discover and interact with your other devices/services, other people’s devices/services, and public devices/services in the local environment. For example, your iPod could spontaneously discover and act as a remote controller for your friend’s connected social TV when you are at her house, but your tweets sent via her TV would originate from your own account.

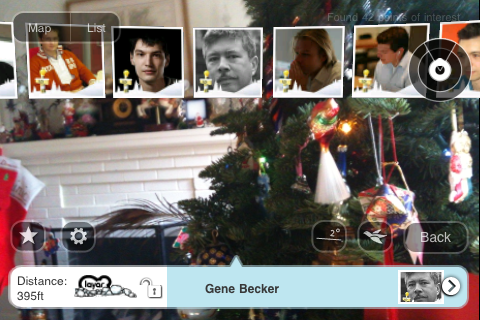

* Physical context models that provide raw sensor data (location, motion, time, temperature, biometrics, physiological data etc) and outputs of sensor fusion algorithms (“Gene’s phone is in his pocket [p=75%] in his car [p=100%] on Hwy 101 [p=95%] in Palo Alto, CA USA [p=98%] at 19.05 UTC [p=97%] on 02010 Nov 4 [p=100%]”).

* Social context models that map individuals and groups based on their relationships and memberships in various communities. One way to think of this is as a set of personal, family, friendship, professional and public spheres.

Each of these is a complex and difficult problem in its own right, and while we can see signs of progress, it is going to take quite a few years for much of this to reach maturity.
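To make the physical context dimension a little more concrete, here is a minimal Python sketch of how the output of a sensor fusion step might be represented: a list of assertions, each carrying its own probability. The names `ContextClaim`, `describe` and `confident` are hypothetical, invented only for illustration, and the probabilities are the example figures from the list above, not real data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ContextClaim:
    """One fused-sensor assertion plus its confidence (hypothetical type)."""
    text: str           # e.g. "in his pocket"
    probability: float  # 0.0 to 1.0


def describe(subject: str, claims: list[ContextClaim]) -> str:
    """Render the claims as a single human-readable sentence."""
    parts = [f"{c.text} [p={c.probability:.0%}]" for c in claims]
    return f"{subject} is " + " ".join(parts)


def confident(claims: list[ContextClaim], threshold: float = 0.9) -> list[ContextClaim]:
    """Keep only the claims a service could reasonably act on."""
    return [c for c in claims if c.probability >= threshold]


# Example values taken from the bullet on physical context models.
claims = [
    ContextClaim("in his pocket", 0.75),
    ContextClaim("in his car", 1.00),
    ContextClaim("on Hwy 101", 0.95),
    ContextClaim("in Palo Alto, CA USA", 0.98),
]

summary = describe("Gene's phone", claims)
print(summary)
print([c.text for c in confident(claims)])
```

The point of the sketch is that the context belongs to the person, not the box: any device or service with the right credentials could read the same list of claims and decide for itself which ones are certain enough to act on.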

The second big reason is, human-centered mobility is not in the interests of the mobile boxes industry. The box makers are battling for architectural control, for developer mindshare, for unique and distinctive end user experiences. Network operators are fighting for subscribers, for value added services, for regulatory relaxation. Mobile content creators are fighting for their lives. And everyone is fighting for advertising revenue. Interoperability, open standards, and sharing data across devices, apps and services are given lip service, but only just barely. Nice ideas, but not good for business.

So here’s a closing thought for you. Maybe human-centered mobility won’t come from the mobile industry at all. Maybe, despite his kidding about the iPad, it will come from Mark Zuckerberg. Maybe Facebook will be the mobile platform that transcends boxes and puts humans at the center. Wouldn’t that be interesting?
